How Nametag’s ID scanning process guides the user to success

A Communication Issue

In automated identity verification (IDV), the key to success is getting a high-quality capture. But no matter which capture method is used, this doesn’t always happen on the first try. ID scanning isn’t as simple as receiving an image of an ID from a user and saying “yes” or “no” to it.

Typically, IDV providers rely on a single pass/fail metric to measure how well they capture IDs: “How often are we able to process a scan on the first try?” While this is one useful indicator, focusing on it alone misses the bigger picture: the opportunity to work with the user to improve the capture across several attempts. Providers rarely ask the more fundamental question: “What are we doing to help our users get a better scan on the second attempt than they did on the first?”

A binary “yes” or “no” treats each scan attempt independently, even when several attempts come from the same user. Instead, the goal should be for every user to end up with a successful scan at the end of their journey, even if it takes several pointers along the way.
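The gap between these two views is easy to see on a log of scan attempts. The minimal Python sketch below computes both the first-try rate and the share of users who eventually get a successful scan; the function names and the toy log are hypothetical and only illustrate the arithmetic, not real Nametag data.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One entry per scan attempt, in chronological order: (user_id, scan_succeeded).
Attempt = Tuple[str, bool]


def first_try_rate(attempts: List[Attempt]) -> float:
    """Share of users whose first attempt succeeded (the single-attempt view)."""
    first: Dict[str, bool] = {}
    for user, ok in attempts:
        first.setdefault(user, ok)  # keep only each user's first attempt
    return sum(first.values()) / len(first) if first else 0.0


def journey_success_rate(attempts: List[Attempt]) -> float:
    """Share of users who ended their journey with at least one successful scan."""
    succeeded: Dict[str, bool] = defaultdict(bool)
    for user, ok in attempts:
        succeeded[user] = succeeded[user] or ok
    return sum(succeeded.values()) / len(succeeded) if succeeded else 0.0


if __name__ == "__main__":
    # Toy log: "a" succeeds on the third try, "c" on the second, "d" never does.
    log = [("a", False), ("a", False), ("a", True), ("b", True),
           ("c", False), ("c", True), ("d", False)]
    print(first_try_rate(log), journey_success_rate(log))  # 0.25 0.75
```

In this toy log, a metric that only looks at first attempts reports one success in four, even though three of the four users ended up with a usable scan.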

In this article, we’ll explore how Nametag’s Dynamic Scanning System uses on-device AI to guide users in adjusting their environment for the best possible scan. This not only means more successful scans by the end of the user journey, but also lets us raise the bar for what counts as an acceptable scan, which strengthens security.

How Dynamic Scanning Works

Let’s look at an example to see how this is done.

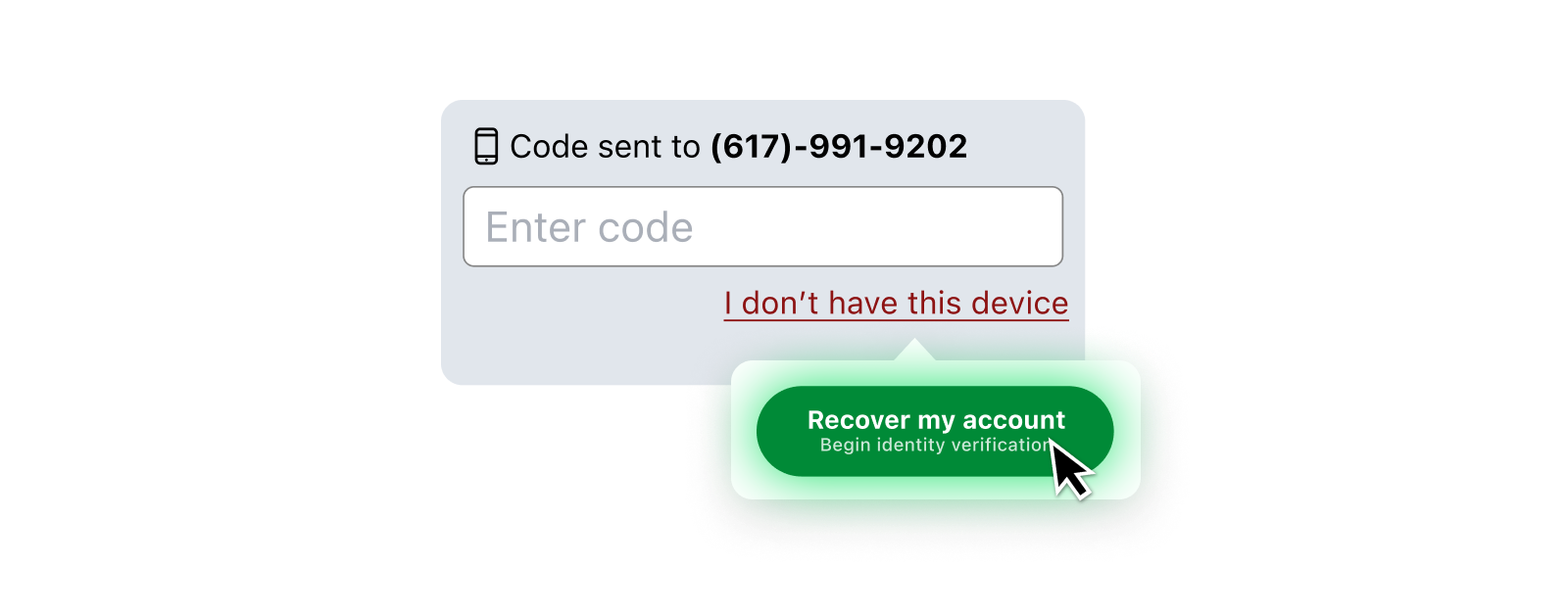

Arjun is having a bad day. After setting up his new iPhone, he mails in his old one for a trade-in discount, only to realize that his Microsoft Authenticator account didn’t transfer. Now he urgently needs to access his bank account but finds himself locked out.

Fortunately, his bank uses Nametag Autopilot for account recovery. After scanning a QR code, he’s prompted to scan the front of his ID from his new device.

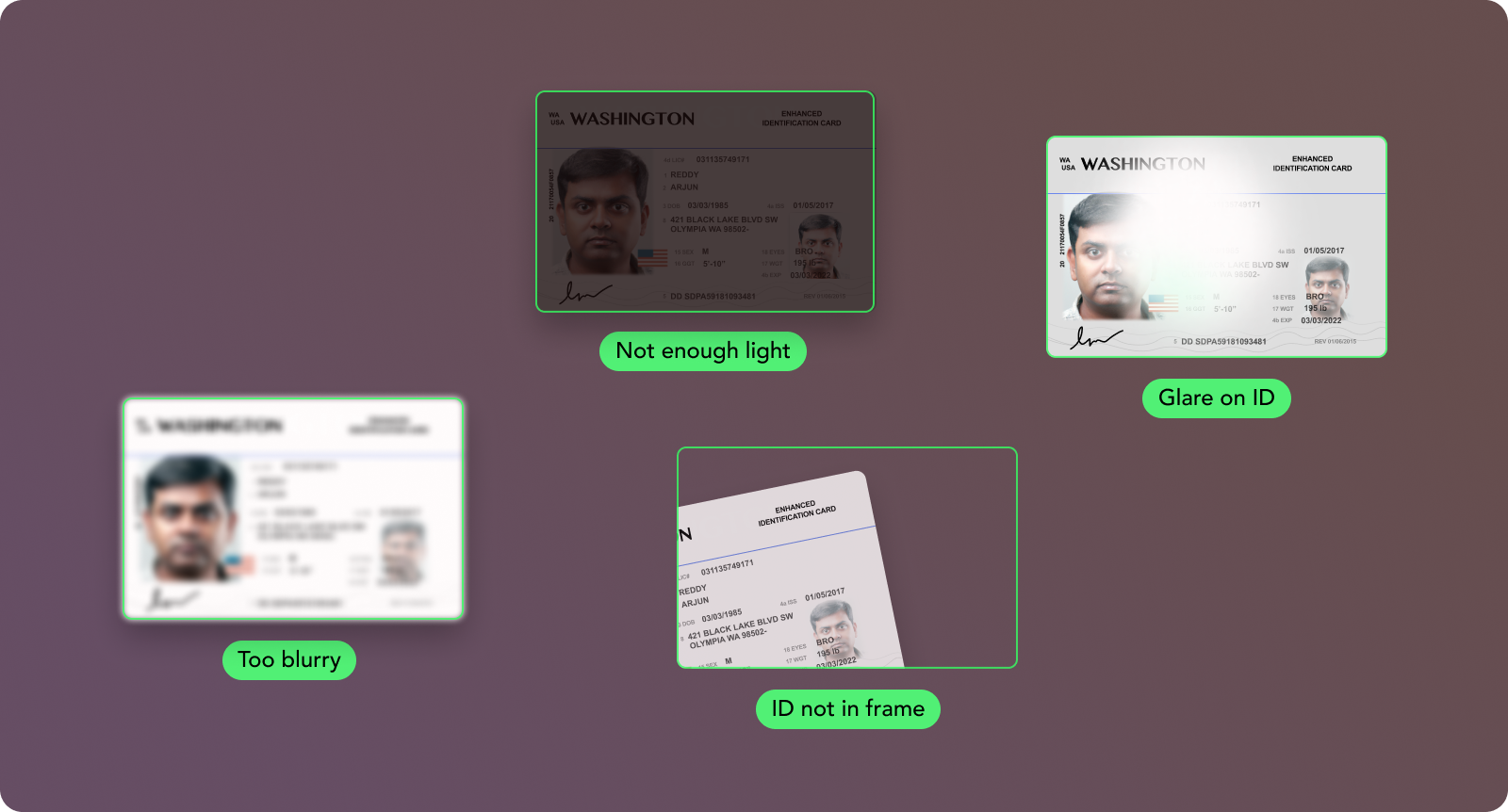

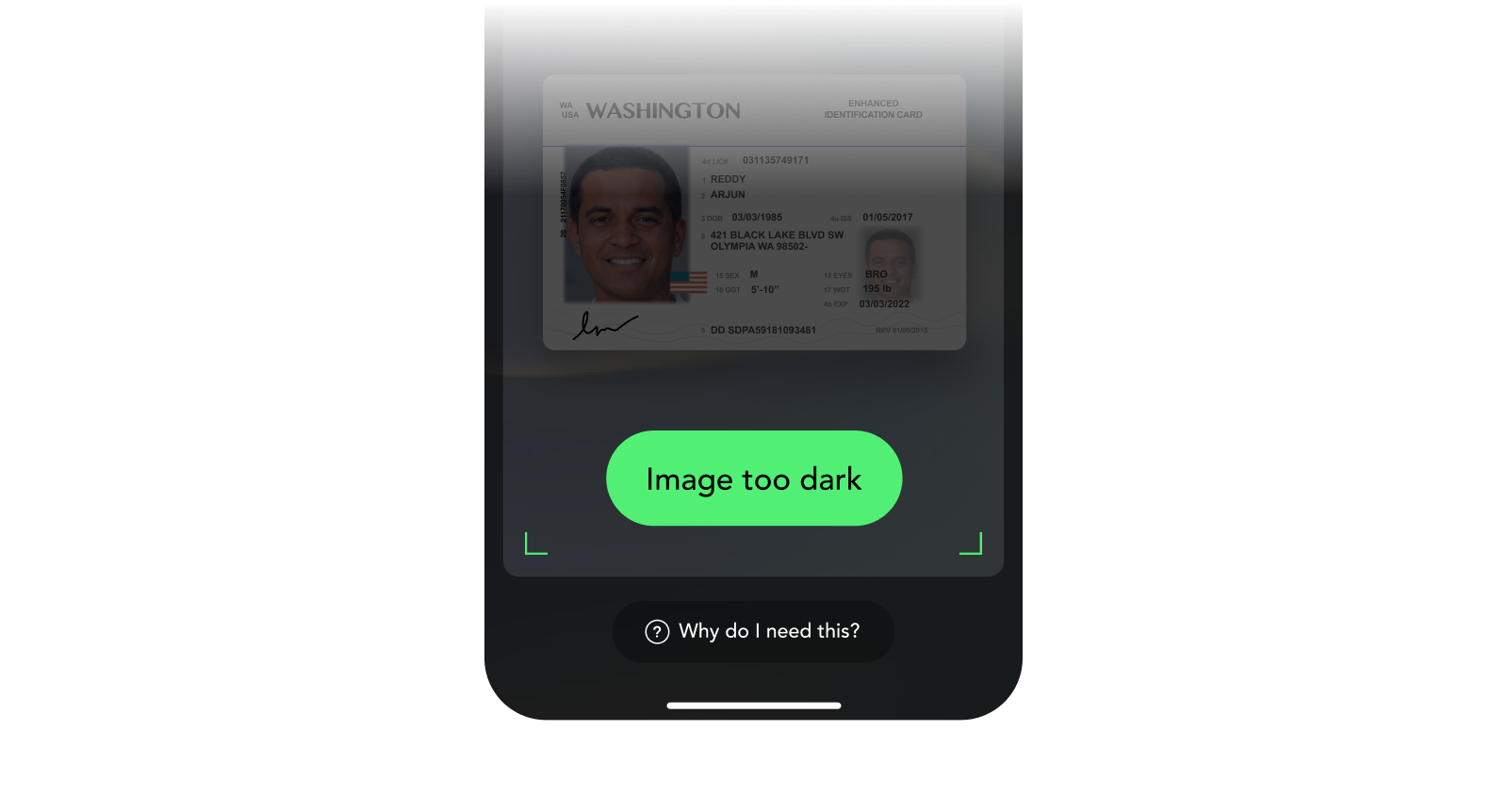

Here, Arjun runs into his first problem: he’s sitting in the dark. When he tries to scan his ID, a helpful hint tells him that there isn’t enough light on the card.

He immediately turns on his desk lamp, but the lamp, shining down from a few feet above, creates glare on the ID that makes it unreadable on camera. Instead of capturing the image anyway, the app again gently prompts him with a hint: “Glare detected on ID”. He tilts the ID slightly to reduce the glare.

Now, Arjun has a new problem. He’s holding the ID too close, so that only half of it is within the frame. But Nametag simply tells him the “ID must be fully in view”, and he’s able to make the adjustment.

With three adjustments, this might sound like a lengthy process. In reality, it still takes only a few seconds. The analysis happens on the fly, and Nametag’s auto-capture doesn’t proceed until the on-device AI has verified that it has a good image. The user never waits for a round trip to a server just to get feedback on something as simple as glare on the ID.
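The feedback loop in Arjun’s example can be sketched as a per-frame check that maps the first detected problem to a hint and allows auto-capture only once a frame passes every check. This is a minimal Python illustration, not Nametag’s implementation: simple pixel statistics stand in for the on-device models, an upstream detector is assumed to supply the card’s bounding box, and the thresholds, the `frame_hint` and `run_capture` helpers, and any hint text other than “Glare detected on ID” and “ID must be fully in view” are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Frame = List[List[int]]          # grayscale pixels, 0-255, indexed [row][col]
Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive

# Hypothetical thresholds, chosen only for illustration.
MIN_MEAN_BRIGHTNESS = 60   # mean card brightness below this counts as too dark
GLARE_PIXEL_VALUE = 250    # pixels at or above this are treated as blown out
MAX_GLARE_FRACTION = 0.05  # more than 5% blown-out card pixels counts as glare


def frame_hint(frame: Frame, card_box: Optional[Box]) -> Optional[str]:
    """Return the first hint that applies to a frame, or None if it is good enough to capture."""
    height, width = len(frame), len(frame[0])
    if card_box is None:
        return "No ID detected"  # hypothetical hint text
    left, top, right, bottom = card_box
    if left < 0 or top < 0 or right > width or bottom > height:
        return "ID must be fully in view"
    card_pixels = [p for row in frame[top:bottom] for p in row[left:right]]
    if not card_pixels:
        return "No ID detected"  # degenerate (zero-area) box
    if sum(card_pixels) / len(card_pixels) < MIN_MEAN_BRIGHTNESS:
        return "Not enough light on ID"  # hypothetical hint text
    glare = sum(1 for p in card_pixels if p >= GLARE_PIXEL_VALUE) / len(card_pixels)
    if glare > MAX_GLARE_FRACTION:
        return "Glare detected on ID"
    return None


def run_capture(stream: Sequence[Tuple[Frame, Optional[Box]]]) -> Tuple[Optional[int], List[str]]:
    """Check frames in order; return the auto-captured frame index (or None) and the hints shown."""
    hints_shown: List[str] = []
    for index, (frame, box) in enumerate(stream):
        hint = frame_hint(frame, box)
        if hint is None:
            return index, hints_shown  # first frame that passes every check is captured
        if not hints_shown or hints_shown[-1] != hint:
            hints_shown.append(hint)   # only surface a hint when it changes
    return None, hints_shown


if __name__ == "__main__":
    def flat(value: int) -> Frame:
        return [[value] * 10 for _ in range(10)]  # uniform 10x10 test frame

    arjun = [
        (flat(20), (2, 2, 8, 8)),    # dark room
        (flat(255), (2, 2, 8, 8)),   # lamp glare
        (flat(128), (-3, 2, 8, 8)),  # card partly out of frame
        (flat(128), (2, 2, 8, 8)),   # good frame
    ]
    print(run_capture(arjun))
    # (3, ['Not enough light on ID', 'Glare detected on ID', 'ID must be fully in view'])
```

Ordering the checks fixes which hint wins when several problems are present at once; here the framing check comes first because the brightness and glare checks only make sense on the card region.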

Globally Aware Prompts

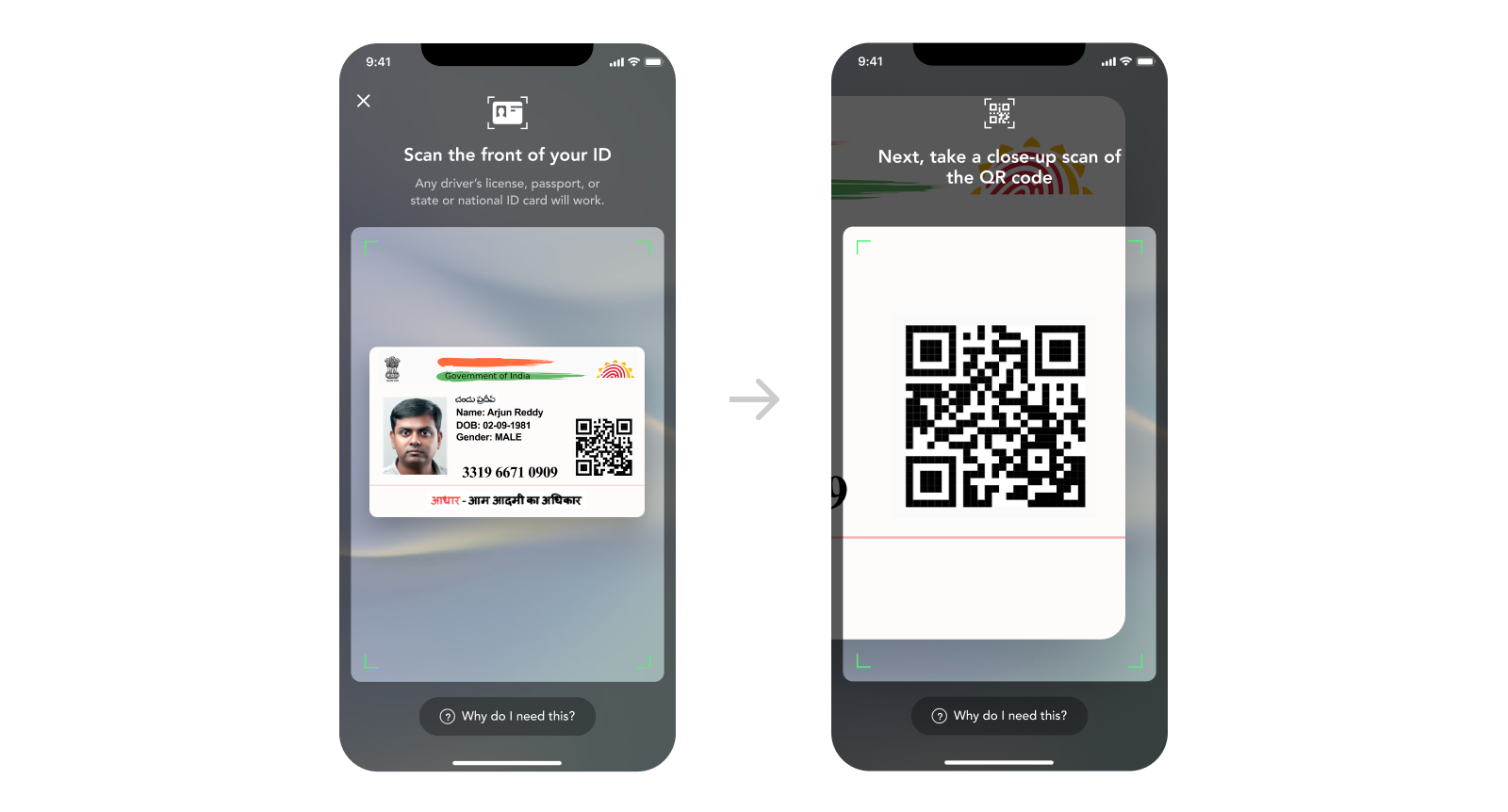

Finally, there’s one last plot twist: Arjun lives in Mumbai, and his ID is an Aadhaar card, the standard form of ID for 1.3 billion people in India. Arjun never tells Nametag to expect an Aadhaar card, yet the flow adjusts automatically. After he scans the front of the card, instead of being prompted to scan the entire back, he’s prompted to focus his scan on the card’s QR code. These tailored instructions guide Arjun naturally through the process, while his ID is analyzed in real time to steer him toward high-fidelity, machine-readable scans.
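One way to picture this adjustment is a lookup from the document type detected on the front of the card to a capture plan. The Python sketch below assumes a front-side classifier that returns a type label; the labels, step names, prompt strings, and the `next_prompt` helper are hypothetical and simply encode the behavior described above.

```python
from typing import Dict, List, Optional

# Hypothetical capture plans keyed by the document type detected on the front of the ID.
# Labels such as "in_aadhaar" are illustrative, not a real classifier's output.
DEFAULT_PLAN: List[str] = ["front", "back"]
CAPTURE_PLANS: Dict[str, List[str]] = {
    # For an Aadhaar card, the full back-side step is replaced by a QR-code-focused step.
    "in_aadhaar": ["front", "qr_code"],
}

PROMPTS: Dict[str, str] = {
    "front": "Scan the front of your ID",
    "back": "Scan the back of your ID",
    "qr_code": "Scan the QR code on your Aadhaar card",
}


def next_prompt(detected_type: Optional[str], completed: List[str]) -> Optional[str]:
    """Return the next capture prompt, or None once every step in the plan is done.

    `detected_type` is None until the front of the card has been scanned and classified,
    so the default plan (which starts with the front) applies until then.
    """
    plan = CAPTURE_PLANS.get(detected_type, DEFAULT_PLAN) if detected_type else DEFAULT_PLAN
    for step in plan:
        if step not in completed:
            return PROMPTS[step]
    return None


if __name__ == "__main__":
    print(next_prompt(None, []))                    # Scan the front of your ID
    print(next_prompt("in_aadhaar", ["front"]))     # Scan the QR code on your Aadhaar card
    print(next_prompt("other_id_type", ["front"]))  # Scan the back of your ID
```

Keeping the plans in a table means that supporting a document with its own back-side requirements is a data change rather than a new code path.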

In reality, Arjun’s journey only scratches the surface of the detections, hints, and on-the-fly adjustments that make up Nametag’s Dynamic Scanning System, which also detects blurry images, photos of IDs displayed on computer screens, and more. With Nametag, getting a successful scan isn’t a guessing game; it’s a fluid, efficient process that delivers consistent results.

Unusual Cases

Nametag has dozens of hint types, each backed by its own computer vision models and recognition functionality. But what if, in a rare case, something goes wrong that Nametag has no prompt for? Even then, Nametag creates opportunities for correction instead of forcing the user to give up.

If Nametag doesn’t find a valid frame for auto-capture, the user is shown a manual shutter button and can take the photo as they see fit. A key insight in reaching a successful scan is that, in cases where the ML models cannot determine the source of the issue, simply showing the captured image back to the user often leads to an easy self-diagnosis.

Put simply, the issues most specific to the user’s current situation are often the ones they can most easily recognize on their own.
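The fallback path can be modeled as a small state machine: auto-capture first, a manual shutter when no valid frame turns up, and a review step that shows the photo back to the user before it is submitted. In this minimal Python sketch, the `Mode` states and event names are hypothetical, and the fixed 15-second auto-capture budget is an assumption, since the article doesn’t say what triggers the switch to manual capture. It also assumes the review step applies only to manually taken photos.

```python
from enum import Enum


class Mode(Enum):
    AUTO = "auto"            # scanning frames for auto-capture
    MANUAL = "manual"        # manual shutter button shown
    REVIEW = "review"        # captured photo shown back to the user
    SUBMITTED = "submitted"  # image accepted for processing


AUTO_TIMEOUT_SECONDS = 15.0  # hypothetical budget before offering the manual shutter


def next_mode(mode: Mode, event: str, elapsed: float = 0.0) -> Mode:
    """Advance the capture flow for one event; unrecognized events leave the mode unchanged.

    Events (hypothetical): "valid_frame", "tick", "shutter", "retake", "confirm".
    `elapsed` is the time spent in auto-capture and is only consulted for "tick".
    """
    if mode is Mode.AUTO:
        if event == "valid_frame":
            return Mode.SUBMITTED  # auto-capture found a frame that passed every check
        if event == "tick" and elapsed >= AUTO_TIMEOUT_SECONDS:
            return Mode.MANUAL     # no valid frame in time: hand control to the user
    elif mode is Mode.MANUAL:
        if event == "shutter":
            return Mode.REVIEW     # show the photo so the user can self-diagnose
    elif mode is Mode.REVIEW:
        if event == "retake":
            return Mode.MANUAL
        if event == "confirm":
            return Mode.SUBMITTED
    return mode


if __name__ == "__main__":
    mode = Mode.AUTO
    events = [("tick", 5.0), ("tick", 15.0), ("shutter", 0.0),
              ("retake", 0.0), ("shutter", 0.0), ("confirm", 0.0)]
    for event, elapsed in events:
        mode = next_mode(mode, event, elapsed)
    print(mode)  # Mode.SUBMITTED
```

The retake transition is what makes the review step useful: a user who spots the problem in their own photo can loop back to the shutter instead of abandoning the flow.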

In Summary

Nametag turns users who would otherwise become “failed capture” cases into success stories, helping more users recover their accounts without the delay of manual intervention.

Schedule a demo, explore use cases, or learn more at https://www.getnametag.com.