Enterprises are embedding AI agents into critical workflows. But a major gap exists between agentic AI adoption and agentic AI security practices. An August 2025 survey by Okta found that 91% of organizations already use AI agents, but fewer than 10% have a mature strategy to secure those agents. And fewer than a third extend human-level governance to agent identities.

This situation is untenable. AI agents can now place orders, access sensitive data, and modify system-level configurations. But IT and security teams have little visibility into who’s really authorizing AI access and actions. Amongst practitioners, there is a deep sense of dread. Something has to change.

That’s why today at Oktane 2025 we're introducing Nametag Signa™, a new way to protect AI agents against misuse. Signa works with Okta to ensure that every AI action carries a trustworthy record of the human being behind that action.

Check out the press release and solution page, or keep reading to learn more. Then contact us to get started with Verified Human Signatures for AI actions.

“Nametag’s introduction of Signa and the Verified Human Signature marks a turning point in the conversation about agentic AI security.” – Aaron Painter, CEO of Nametag

Agentic AI Security is Inextricably Linked to Human Identity Security

To do its job effectively, an AI agent must have access to a wide range of external systems, applications, data sources, and even other agents. Granting this access can unlock huge productivity and efficiency gains. But it also creates opportunities for AI agents to be misused or manipulated.

This leaves CISOs and CIOs in a tough spot. On the one hand, you’re responsible for protecting your organization against breaches; on the other hand, business leaders are eager to deploy agentic AI across the company.

“Effective AI security requires verification of both the human and non-human identities associated with AI.” – Todd Thiemann, Principal Analyst at Enterprise Strategy Group

Until now, the missing ingredient was assurance: a way to know with certainty not just what an AI is doing, but who is behind that action. When someone signs into an AI app, how do you authenticate that sign-in? How much assurance does that authentication factor really provide? And when an AI needs to access a resource outside of its trust domain, how do you know whether to approve it?

Bad actors have repeatedly demonstrated that they can compromise even the most phishing-resistant MFA. Issuing an OAuth token on the strength of basic MFA assurance alone can lead to dangerous over-privilege. Enter Nametag Signa.

By combining Nametag’s Deepfake Defense™ identity verification engine with Okta’s AI policy and governance engine, every AI access and action can carry an auditable record proving it was authorized by a real, verified person.

Introducing Nametag Signa™: Verified Human Signatures for Agentic AI Actions

Nametag Signa gives IT and security teams a reliable, trustworthy way to protect their organization against agentic AI misuse, enabling safe AI adoption. It uses our Deepfake Defense™ engine to create a cryptographically attested proof of the human identity behind an AI action: a Verified Human Signature.
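To make "cryptographically attested proof" more concrete, here is a minimal Python sketch of the general idea: bind a verified person, an agent, and an action into one record, then sign it so any later change is detectable. This is not Nametag's implementation or signature format, which this post does not describe. It assumes a hypothetical signing key held by the verification service and uses HMAC-SHA256 from the standard library as a stand-in; a real system would more likely use asymmetric signatures so auditors can check records without holding the signing key. The names `sign_action`, `verify_action`, and the record fields are all hypothetical.

```python
import hashlib
import hmac
import json
import time

# Hypothetical key held by the verification service (illustration only).
# HMAC-SHA256 is a stand-in; Signa's actual signing scheme is not described here.
SIGNING_KEY = b"demo-key-not-for-production"


def _canonical(payload: dict) -> bytes:
    # Serialize deterministically so the same record always produces the same bytes.
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_action(verified_user: str, agent_id: str, action: str,
                key: bytes = SIGNING_KEY) -> dict:
    """Bind a verified human, an agent, and an action into one signed record."""
    payload = {
        "verified_user": verified_user,  # assumed to be confirmed by a selfie reverification
        "agent_id": agent_id,
        "action": action,
        "verified_at": int(time.time()),
    }
    signature = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_action(record: dict, key: bytes = SIGNING_KEY) -> bool:
    """Return True only if the record was signed with `key` and has not been altered."""
    expected = hmac.new(key, _canonical(record["payload"]), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, record["signature"])


if __name__ == "__main__":
    record = sign_action("alice@example.com", "procurement-agent", "update_payment_details")
    print(verify_action(record))
    record["payload"]["verified_user"] = "mallory@example.com"  # simulate tampering
    print(verify_action(record))
```

Run as a script, the first check passes and the second fails, because swapping the named approver changes the signed bytes.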

When an AI action carries a Verified Human Signature, you can trust that the action was approved not just by a real person, but by the right person. Signa offers a pragmatic way to embed a new tier of identity assurance into agentic AI workflows, so you can enable AI innovation without losing sleep.

“Solutions that embed human identity verification into AI workflows give enterprises a practical way to reduce AI risk without slowing AI adoption.” – Todd Thiemann, Principal Analyst at Enterprise Strategy Group

Currently, Nametag Signa solves two critical AI governance challenges:

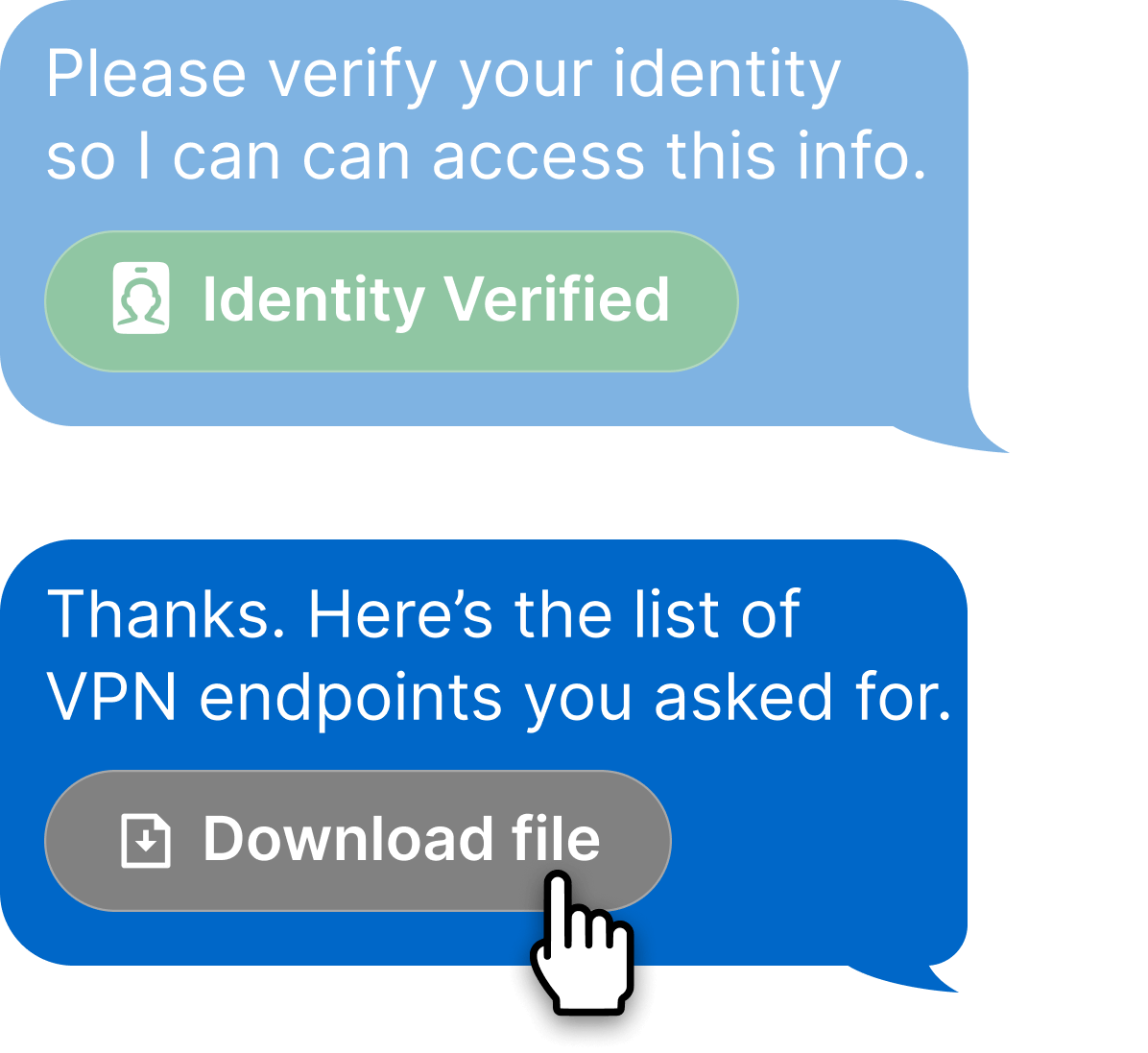

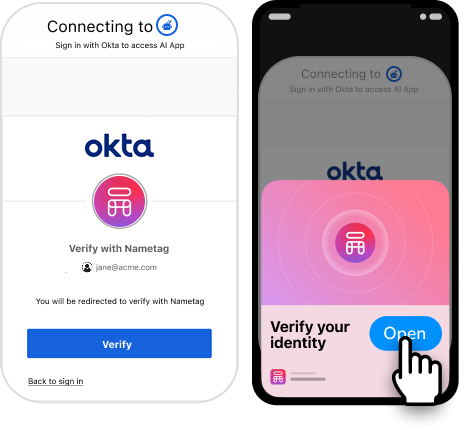

- Verified Human Sign-in for AI Apps: When a person signs into an AI app, Okta enforces authentication policies. Nametag serves as the MFA factor, ensuring that the person accessing the app is really who they’re claiming to be. This provides a verifiable record of who is behind the AI session.

- Verified Human Approval for AI Actions: When an AI attempts to complete certain actions, Okta policies trigger step-up authentication. Nametag verifies the human-in-the-loop before the action is allowed to proceed, creating an auditable record of who authorized the AI agent's action.
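The step-up flow in the second bullet can be sketched as a simple gate: routine agent actions pass through, while sensitive ones proceed only after the human behind the session reverifies, and every decision lands in an audit log. In Signa the policy lives in Okta and verification runs through Nametag; this sketch collapses both into plain Python for illustration. `SENSITIVE_ACTIONS`, `ApprovalRecord`, and `authorize_agent_action` are hypothetical names, and the `verify_human` callback is an assumed stand-in for the selfie-based reverification.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, List, Optional

# Hypothetical policy: agent actions that require a verified human in the loop.
SENSITIVE_ACTIONS = {"change_payment_info", "grant_access", "place_order"}


@dataclass
class ApprovalRecord:
    """Auditable record of an agent action and who, if anyone, authorized it."""
    agent_id: str
    action: str
    approved_by: Optional[str]  # None when no step-up ran or verification failed
    allowed: bool
    timestamp: str


def authorize_agent_action(
    agent_id: str,
    action: str,
    session_user: str,
    verify_human: Callable[[str], bool],
    audit_log: List[ApprovalRecord],
) -> bool:
    """Allow routine actions; require human reverification for sensitive ones."""
    now = datetime.now(timezone.utc).isoformat()
    if action not in SENSITIVE_ACTIONS:
        audit_log.append(ApprovalRecord(agent_id, action, None, True, now))
        return True
    # Step-up: the person behind the session must reverify before the action proceeds.
    verified = verify_human(session_user)
    approver = session_user if verified else None
    audit_log.append(ApprovalRecord(agent_id, action, approver, verified, now))
    return verified


if __name__ == "__main__":
    log: List[ApprovalRecord] = []
    # Stand-in verifier: pretend only Alice passes reverification.
    check = lambda user: user == "alice@example.com"
    authorize_agent_action("procurement-agent", "change_payment_info",
                           "alice@example.com", check, log)
    for entry in log:
        print(entry)
```

Keeping the audit record separate from the allow/deny decision means denied attempts are logged too, which is what makes the trail useful after an incident.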

For your end-users, the flow is quick and non-intrusive. They’re simply prompted to authenticate via Okta, then take a selfie to reverify their identity.

Explore Nametag’s end-user experience →

How It Works: Nametag Signa for Okta

Initially available for Okta, Nametag Signa builds on our expanded integration with Okta to create an auditable chain of trust for AI agents as they access resources and perform actions. In essence, Okta acts as the policy engine for AI agents, while Nametag acts as the authentication and security layer.

Setting up Nametag Signa for Okta is straightforward. You use the Okta interfaces you’re already familiar with to create, configure, and assign policies to AI apps and agents that require verification via Nametag in scenarios you define, such as granting access or changing payment information.

“Okta is a fantastic policy and risk engine for AI; Nametag is the best way to verify which human is behind an AI session or action. Together, Nametag and Okta are enabling the secure adoption of AI across the enterprise.” – Aaron Painter, CEO of Nametag

On Nametag’s side, we empower you with a wide range of options to align with your organization’s security policies, privacy preferences, and requirements.

Configure Nametag Signa for Okta:

- Use our dev docs to set up Nametag as an Identity Provider in Okta.

- Configure Okta authentication, sign-in, and access request policies to require verification via Nametag in defined AI scenarios, such as signing in to an AI app or when an AI agent attempts to perform certain actions.

Because it combines Okta’s policy engine for AI with Nametag’s industry-leading identity verification, this approach aligns with emerging standards like Model Context Protocol (MCP), Agent2Agent (A2A), and Agent Payments Protocol (AP2).

Know Your Agent (KYA) vs. Signa

As agentic AI becomes more common, the idea of Know Your Agent (KYA) has emerged. Modeled after the Know Your Customer (KYC) checks required by anti-money laundering regulations, KYA aims to enable autonomous financial services by applying consumer verification practices to AI agents. But verifying an AI agent alone isn’t enough; you also need to know who is behind the agent.

KYA and Nametag Signa are complementary. Where KYA checks whether an agent is legitimate and authorized to perform an action, Nametag verifies that the human who’s using that agent is themselves legitimate and authorized.

A New Standard for AI Security

We see Nametag Signa and the concept of a Verified Human Signature as the beginning of a broader trend in AI security: embedding human identity verification directly into agentic AI workflows. Just as phishing-resistant MFA became the standard for human logins, cryptographic human identity assurance for AI is poised to become table stakes for enterprises.

Okta provides the policy and risk engine; Nametag ensures that the human behind each AI session or action is who they claim to be. Together, we’re creating the foundation for secure adoption of agentic AI at scale.

If you’re ready to protect AI agents with verified human signatures, learn more on our solution page, explore our other workforce identity verification solutions, and then get in touch to discuss your particular projects and requirements.
