At Oktane 2025, the same two emotions echoed in every corner of the venue: security and IAM professionals are excited about what agentic AI can do, but they are dreading what happens if it acts without proper guardrails. Leaders are being asked to move fast and enable AI adoption, but they know that an over-permitted agent is a breach waiting to happen. Both feelings are rational; the question is how to channel them into a workable AI security program.

Okta's AI security conference gave us all a clear answer: treat AI agents as first-class identities inside your workforce identity stack; give teams visibility, governance, and control that matches what they already use for human users; then add verified human oversight for the moments that carry the most risk.

Nametag Introduces Verified Human Signatures for Agentic AI Actions, Expanded Okta Integration →

Notes on Okta's Main Keynote

Okta CEO Todd McKinnon framed the problem plainly: business teams want the productivity gains that AI agents promise, while security and IT teams see a huge gap between deployment and security controls. Okta is supporting identity teams by applying mature IAM and PAM principles to AI identities so that organizations can see and manage human and non-human users from the same place. The live demo of AI agent governance drew the strongest reaction of the day. Clearly, attendees were ready for a concrete and intuitive way to manage AI agent identities with the same rigor they apply to human identities.

Two elements of Okta’s approach stood out to us:

- A vendor-neutral posture that keeps the decision about AI apps with the customer while Okta focuses on governance and control across them.

- The introduction of Cross App Access, an open protocol designed to standardize how users, agents, and applications connect securely.

Notes from the Show Floor

Whether talking with us at our booth or asking questions in breakout sessions, security and IT leaders described the same pressure pattern: business leaders expect them to securely enable AI adoption across business units, they see that agents are being granted access to everything, and they know that if something goes wrong, accountability will land on them.

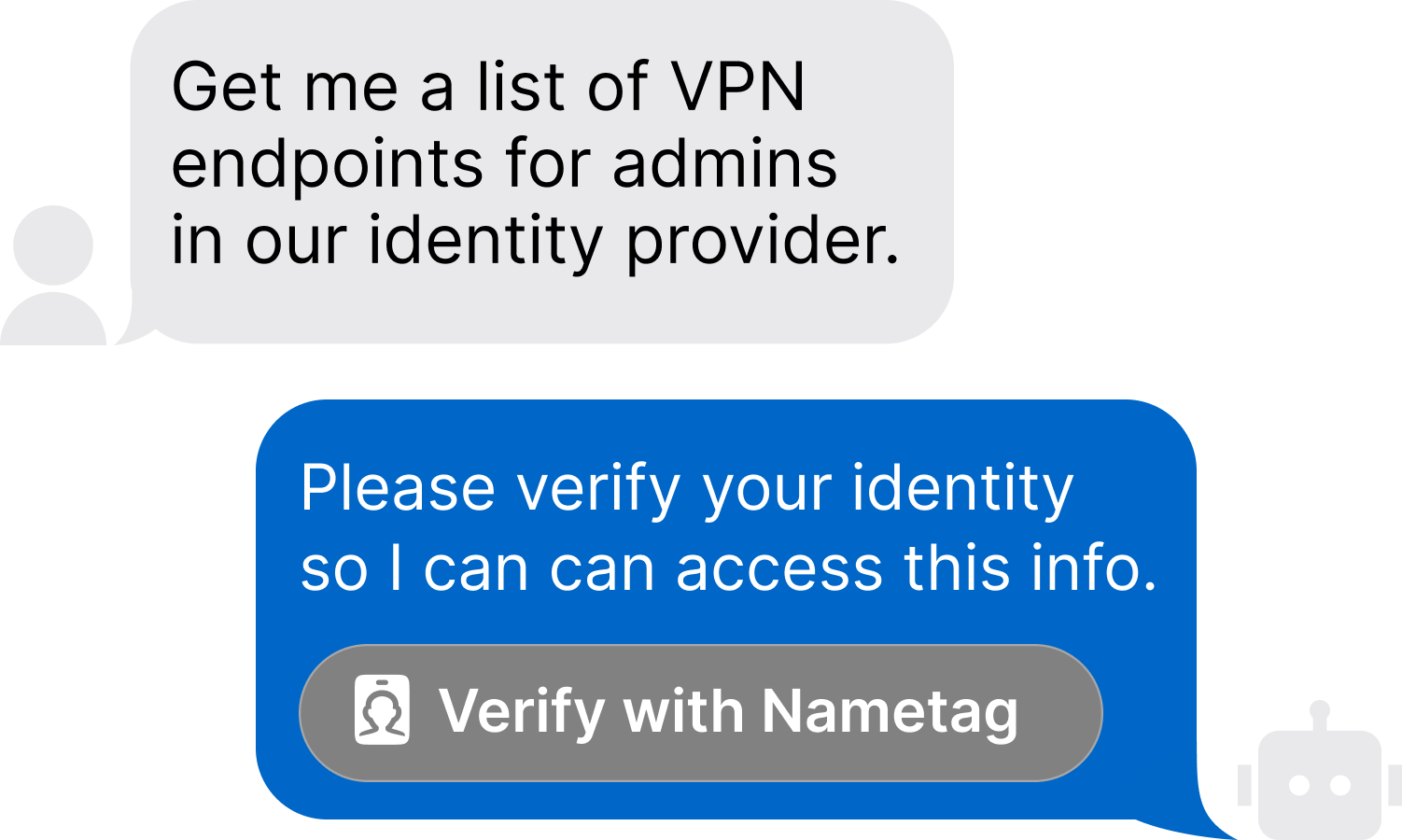

Over and over again, the conversation kept returning to a powerful principle: let agents work freely on low-risk tasks, and require verified human signatures for high-impact decisions.

This is why identity governance for AI agents drew so much interest. Identity teams need visibility into which agents exist, oversight of what they can do, and controls to configure and enforce access policies. And they want an easy way to automatically bring a verified human into the loop on agent actions that can have a material impact, like moving money or approving access requests.

Key Signals at Oktane 2025

In our view, Oktane 2025 marked a transition in the advancement of agentic AI. AI agents have moved from theory to a baseline capability that requires a considered security strategy. Three signals are worth calling out in particular:

- AI identity governance is table stakes. Treating AI agents as manageable identities is a baseline requirement for enterprise security programs. Agentic identity controls need to live where IAM teams already operate.

- Interoperability will matter more and more. With more models, tools, and agents entering the stack every day, standardized connection frameworks like Cross App Access can both reduce friction and increase auditability.

- Clarity and functionality are key. Security practitioners are backing concrete approaches that clearly show how policies are enforced, how approvals are captured, and how authentication evidence is produced.

How Nametag is Supporting Okta

Nametag’s new Signa™ solution for Okta made big waves on the show floor.

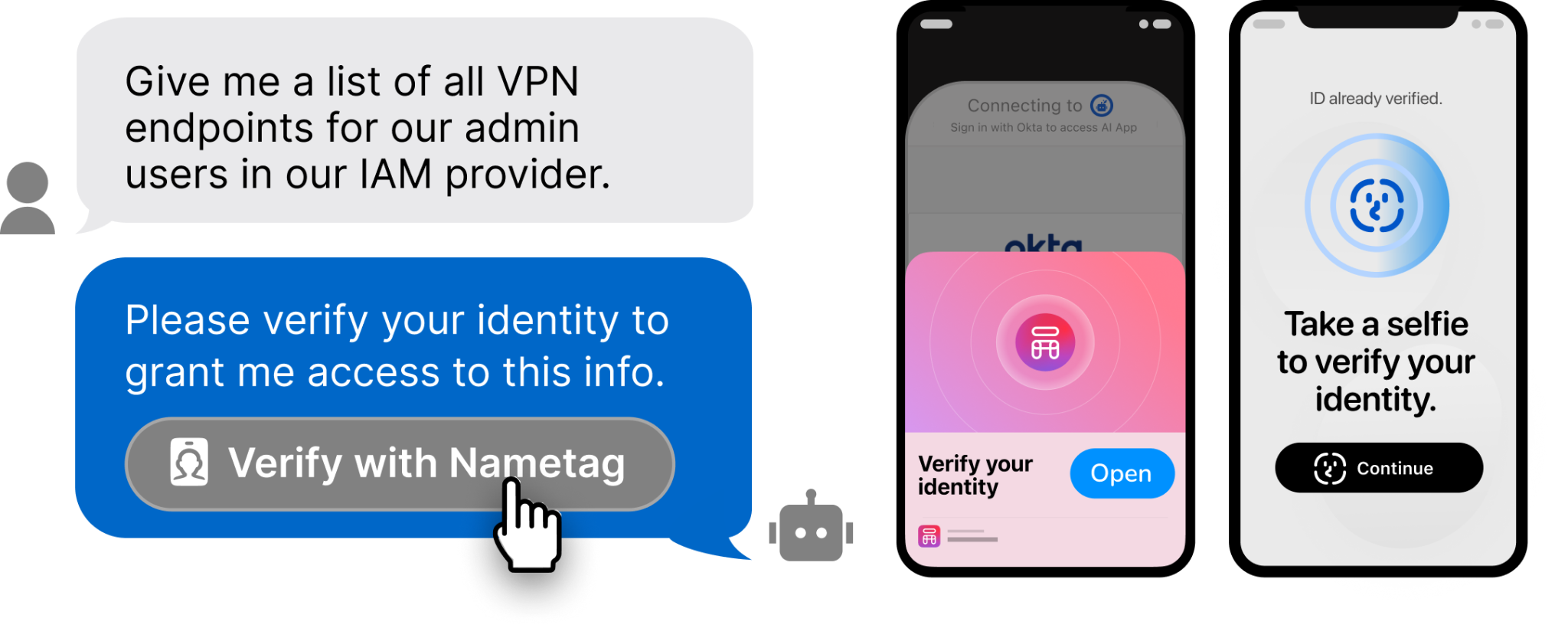

Signa draws on our expanded integration with Okta to add verified human signatures to AI-initiated actions, so that sensitive actions can’t execute without the right person approving them. In effect, Okta acts as the policy engine for AI identities; Nametag acts as the high-assurance authentication layer, verifying the human identity behind the AI. The result is proof of verified human oversight of particular AI actions.

This idea resonated throughout the week. Attendees told us that extending Okta identity governance to AI agents is logical and welcome, but they also want an assurance level for AI that's greater than most MFA can provide.

Signa answers that need by using our Deepfake Defense™ engine to create a cryptographically attested proof of identity. Through Signa, AI agents can only do things like move funds, alter entitlements, and change records after approval by a verified human. And Signa is more than an idea: it’s a real solution that’s available now and can be implemented today.

Learn more about Nametag Signa for Okta →

Verified Human Signatures for AI Actions

Consider a finance automation agent that prepares vendor payments. Drafting invoices is low risk; submitting a batch payment is not.

- With governance in place through Okta, the agent can work through collection and reconciliation on its own. When it reaches the point of releasing funds above a threshold, the workflow pauses and requests a verified human signature from the designated approver.

- That signature is collected through Nametag Signa, which verifies that the person approving the payment is the right person.

- The agent continues once the signature is verified. The result is faster processing with a clear approval trail and no guesswork about who allowed what.

The same pattern can be applied to privileged access requests, workforce and customer user account changes, and other “high-assurance” actions. Let agents operate within policy boundaries established and governed in Okta; require a verified human signature via Nametag Signa when the action crosses a risk boundary.
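To make the pattern concrete, here is a minimal Python sketch of the approval gate described above. It is illustrative only and does not use any Okta or Nametag API: `APPROVAL_THRESHOLD`, `PaymentBatch`, `Signature`, `release_payment`, and the `request_signature` callback are all hypothetical names chosen for this example, and the threshold value is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical threshold; the right value depends on your own risk policy.
APPROVAL_THRESHOLD = 10_000.00


@dataclass
class PaymentBatch:
    batch_id: str
    total_amount: float


@dataclass
class Signature:
    approver_id: str  # who signed
    verified: bool    # whether the signer's identity was verified


# Hypothetical callback that asks the designated approver for a signature.
# In a real deployment this step would be handled by your identity tooling;
# here it is a plain function so the sketch stays self-contained.
SignatureRequester = Callable[[PaymentBatch, str], Optional[Signature]]


def release_payment(
    batch: PaymentBatch,
    designated_approver: str,
    request_signature: SignatureRequester,
) -> str:
    """Release a batch, pausing for a verified human signature above the threshold."""
    # Low-risk path: the agent proceeds on its own.
    if batch.total_amount <= APPROVAL_THRESHOLD:
        return "released:auto"

    # High-risk path: pause and request a signature from the designated approver.
    signature = request_signature(batch, designated_approver)
    if signature is None or not signature.verified:
        return "blocked:no-verified-signature"
    if signature.approver_id != designated_approver:
        return "blocked:wrong-approver"

    # The returned string doubles as a simple approval trail entry.
    return f"released:approved-by:{signature.approver_id}"
```

The gate fails closed: a missing, unverified, or mismatched signature blocks the action rather than letting it through, which is one reasonable reading of "the right person" approving the payment.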

AI Security Checklist for CISOs and IAM Leaders

Security leaders asked us for a concrete next-step list they could take back to their teams. Here is a short checklist that reflects our conversations and learnings at Oktane, designed to help security and IT teams gain visibility, align agentic AI policies with business impact, and reserve human attention for the places it counts.

AI Security Checklist

- Inventory agents. Establish a single source of truth for every AI agent in use, including its purpose, scopes, and data access. Treat this exercise like service account hygiene, but with agent-specific metadata and context.

- Classify actions by risk. Work with stakeholders across your organization to map agent actions to impact tiers. Tie each tier to policy, logging, and approval requirements. Create risk matrices and account for business impact versus security risk.

- Apply governance controls. Use Okta’s governance engine to enforce least privilege for AI agents. Configure and assign appropriate authentication, access, and sign-in policies to mitigate the risk of over-privileged agents.

- Require verified human signatures for high-risk steps. Use Nametag Signa for Okta to require designated approvers to verify their identity before sensitive actions can execute. Create an auditable record of the human behind the AI.
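As a starting point for the first two checklist items, the inventory and risk classification could be modeled roughly as follows. This is a sketch under our own assumptions, not a schema from Okta or Nametag: the three tiers, the `TierRequirements` fields, and the `AgentRecord` structure are hypothetical, and your own tiers should come from the stakeholder mapping described above.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class TierRequirements:
    detailed_logging: bool  # capture full action context in the audit log
    human_signature: bool   # require a verified human approver


# Hypothetical mapping from impact tier to logging and approval requirements.
REQUIREMENTS = {
    RiskTier.LOW: TierRequirements(detailed_logging=False, human_signature=False),
    RiskTier.MEDIUM: TierRequirements(detailed_logging=True, human_signature=False),
    RiskTier.HIGH: TierRequirements(detailed_logging=True, human_signature=True),
}


@dataclass
class AgentRecord:
    """One inventory entry: what the agent is for and what it can touch."""
    name: str
    purpose: str
    scopes: list[str]
    data_access: list[str]
    action_tiers: dict[str, RiskTier] = field(default_factory=dict)

    def requirements_for(self, action: str) -> TierRequirements:
        # Unclassified actions are treated as HIGH risk (fail closed).
        # This default is a design choice of the sketch, not a stated rule.
        tier = self.action_tiers.get(action, RiskTier.HIGH)
        return REQUIREMENTS[tier]


agent = AgentRecord(
    name="payments-agent",
    purpose="Prepare vendor payments",
    scopes=["invoices:read", "payments:submit"],
    data_access=["erp"],
    action_tiers={
        "draft_invoice": RiskTier.LOW,
        "release_funds": RiskTier.HIGH,
    },
)
```

With a record like this, `agent.requirements_for("release_funds")` indicates that a human signature is needed, while `agent.requirements_for("draft_invoice")` does not. Defaulting unknown actions to the highest tier keeps newly added agent capabilities from slipping through unreviewed.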

Oktane 2025 Summary & Resources

The dread is not irrational. The excitement is justified. Oktane showed a path that lets both feelings coexist in a useful way. Okta is extending governance to the agent world so teams can see and control non-human identities with familiar tools. Nametag is adding verified human signatures to protect the few moments where an unsafe action could change the business.

That combination gives leaders room to move. You can keep agents productive, keep policies consistent, and keep accountability clear.

- Watch Oktane on demand. We particularly recommend Todd McKinnon’s primary keynote and the Okta Platform roadmap presentation. Note that more sessions are being made available on-demand on an ongoing basis.

- Explore Nametag Signa. Learn how verified human signatures fit into your Okta-powered approval flows for AI-initiated actions.

- Talk with our team. Whether you’re mapping your first agent governance program or formalizing an existing one, we can help you align Okta capabilities and Nametag identity verification with your own projects.